Understanding Display "Resolution" (Retina display)

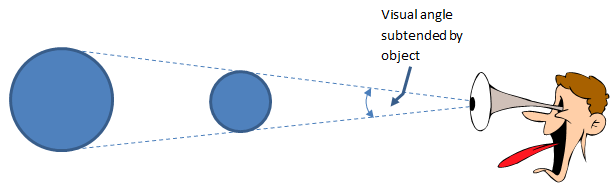

When I first heard the iPhone's "Retina display" explained, my perplexed reaction was "what for?" By the way, "Retina display" is just a marketing term for a pixel density greater than 300dpi. Display area is probably the most expensive piece of real estate in the world after the Disney Store in New York City's Times Square. When you have a large spreadsheet to analyze, every pixel is priceless. Why would you want to waste 960x640 pixels of screen real estate at 326dpi? It's not about discerning (read: snobby) people being able to tell the dots apart when the display density is below 200dpi. For paper printouts, yes, you would want 300dpi or better. But for an electronic display that is refreshed 30 times a second? For an electronic display showing moving images or video? My main beef with high-resolution displays on cell phones is that the density is too high. The density should be reduced to make the display more readable when each